GitLab Code Quality Duplication Analysis With PMD CPD

This post describes an alternative to the built-in GitLab Code Quality duplicate or similar code analysis, which uses Code Climate. It details four significant issues observed with the Code Climate analysis and an assessment of alternative tools for duplication analysis. This lead to the creation of a new project using PMD Copy/Paste Detector (CPD) that returns a GitLab Code Quality formatted report.

Issues with Code Climate duplication analysis #

Experience with the built-in GitLab Code Quality duplication analysis, performed by Code Climate, has shown the results to have several categories of issues. Before diving into them, the following is a brief summary of how that analysis is performed. Per the Code Climate documentation, the code is parsed into an abstract syntax tree (AST), and each node of the tree is given a mass based on the number of sub nodes. Code Climate compares each node to other nodes to find duplicates, and reports those greater than the applicable mass threshold (duplicate code or similar code). Nodes that have the same structure and values are duplicate code, and those with the same structure but different values are similar code.

The following sections detail four categories of incomplete, incorrect, or non-intuitive results. These sections compare some simplified JavaScript code samples for brevity, and where appropriate uses artificially low thresholds to find duplicate code.

Inaccurate mass calculation #

There are cases where the duplicate mass reported by Code Climate is not accurate. For example, given the following code:

export const anotherFunction = (value) => {

if (value) {

console.log(`value: ${value}`);

} else {

console.log('nada');

}

};

const function1 = () => {

const a = [1, 3, 5];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

if (a[i] < 5) {

console.log(a[i]);

}

// output error if value equal to 5

if (a[i] === 5) {

console.log('error');

}

}

};

const function2 = () => {

const a = [2, 4];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

// log if less than 5

if (a[i] < 5) {

console.log(a[i]);

}

if (a[i] === 5) {

console.log('error');

}

}

};Code Climate reports the following mass values:

- The complete function

anotherFunction(highlighted in yellow) is reported to have a mass of 58 (seems correct). - Part of the function

function1(theforloop highlighted in blue) is reported to have a mass of 10 (definitely incorrect). - Part of the function

function2(theifstatement highlighted in yellow), which is a subset of thefunction1forloop, is reported to have a mass of 19 (seems correct).

This disparity makes it challenging to impossible to trust the results, and certainly impossible to accurately tune the mass threshold to get the desired results. Testing has confirmed these mass values are being used to filter results and are not just a report display error.

Adjacent duplicates are not grouped #

As noted previously, the code duplication algorithm looks at the tree of nodes in the AST representation of the code. This means that if adjacent lines of code are duplicates, but do not have a common parent node that includes only the duplicate code, they are reported separately. For example, given the following code:

const function1 = () => {

const a = [1, 3, 5];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

if (a[i] < 5) {

console.log(a[i]);

}

// output error if value equal to 5

if (a[i] === 5) {

console.log('error');

}

}

};

const function2 = () => {

const a = [2, 4];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

// log if less than 5

if (a[i] < 5) {

console.log(a[i]);

}

if (a[i] === 5) {

console.log('error');

}

}

};Code Climate reports two sets of duplicates: the individual console.log lines

as one (highlighted in yellow), and the complete for loop as the other

(highlighted in blue).

In addition, depending on the code in each adjacent duplicate group, the mass of

the combined code may be reportable as a duplicate, but the mass of each

individual line may not be, in which case the duplication is not reported at

all. This is illustrated in the following example, where five lines in each

function are exact duplicates, but function2 has an additional console.log

line at the start.

const function1 = async (x) => {

const url = `https://somewhere.test/${x}`;

console.log("Fetching result for:", url);

const response = await fetch(url);

const result = await response.json();

console.log("result:", result);

};

const function2 = async (x) => {

console.log("Checking ID: ", x);

const url = `https://somewhere.test/${x}`;

console.log("Fetching result for:", url);

const response = await fetch(url);

const result = await response.json();

console.log("result:", result);

};Per Code Climate's algorithm, each of the five duplicate lines has a mass 12 - 16, with a total mass of 69. With the default JavaScript mass threshold of 45, Code Climate reports no duplicate code, even though the collection of adjacent lines together exceeds that threshold. The mass threshold would need to be reduced to 12 to catch the duplication in individual lines, and then Code Climate reports duplicates for each of the five lines separately.

Unidentified duplicates #

There are cases where the Code Climate duplication algorithm simply misses duplicates. For example, given the following code, which has the same function copied three times, each with the function/variables renamed and different comments:

const badWords = ['badword1', 'badword2', 'badword3'];

const function1 = (value) => {

if (badWords.includes(value)) {

console.log('***');

} else {

console.log(value);

}

};

const function2 = (input) => {

if (badWords.includes(input)) {

// Redact bad words

console.log('***');

} else {

console.log(input);

}

};

const function3 = (something) => {

if (badWords.includes(something)) {

console.log('***');

} else {

console.log(something);

}

};Code Climate reports the two highlighted functions (the first and third) as duplicates, but the middle function is not identified as a duplicate. It's possible the comment in the middle is impacting the results, but there are other examples where this is not the case (for example, here).

Noisy similar code results #

Given the definition of similar code, Code Climate frequently reports code that is generally similar, and unlikely to be refactored. For example, given the following code:

const function1 = () => {

const a = [1, 3, 5];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

if (a[i] < 5) {

console.log(a[i]);

}

// output error if value equal to 5

if (a[i] === 5) {

console.log('error');

}

}

};

const function2 = () => {

const a = [2, 4];

console.log(`total: ${a.length}`);

for (let i = 0; i < a.length; i++) {

// log if less than 5

if (a[i] < 5) {

console.log(a[i]);

}

if (a[i] === 5) {

console.log('error');

}

}

};Code Climate reports the four highlighted if statements as the same similar

code. In this case it's unlikely that anyone would refactor the if statements

to reduce duplication, especially the different comparison operators, so the

similar code results can be more noisy than useful.

Alternate tool options #

Give the Code Climate issues previously discussed, a search was done looking for alternative tools. The criteria for the tools were left as vague as practical:

- Tools needed to support most, if not all, of the languages that Code Climate currently supports (most was left undefined, but at least JavaScript/TypeScript and Go, since that's what I write).

- Tools should detect duplicate code, where duplicate code should exclude spacing, comments, and variable names/values to the greatest degree practical. This terminology is different than Code Climate uses, where some of these cases would constitute similar code.

- The tools had to be actively maintained. That was also a little vague, but to be actively maintained a tool should have a release in the last year (ideally in the last 3 months), commits in the last 3 months, and responses to at least some new project issues.

- The tools must output results in some structured format that can be converted to a GitLab Code Quality report.

Some investigation led to two primary candidate tools warranting further investigation, PMD Copy/Paste Detector (CPD) and jscpd. Both of these tools take a different approach from Code Climate and use variations of the Rabin-Karp algorithm for detecting duplicate code.

PMD Copy/Paste Detector (CPD) #

PMD is primarily a static analysis tool for Java and Apex (and is itself a Java-based tool), although provides some support for 16 other languages. For comparison, it includes 273 rules for Java, but only 17 rules for JavaScript. Included with it is the PMD Copy/Paste Detector (CPD), which checks for duplicate code. PDM CPD supports 31 languages, including all of the languages supported by Code Climate.

jscpd #

jscpd is a JavaScript-based tool to check for duplicate code. It supports over 150 languages, including all of the languages supported by Code Climate.

Comparison #

In general, both tools are capable and produced similar results. Overall, PMD CPD produced more comprehensive results, as illustrated in the following examples. There were three primary areas where jscpd had limitations:

- By design, jscpd only compares two sections of code, so for cases of 3 or more duplications they're reported in pairs. With this, it's not as clear that they're duplicates of the same code. Alternatively, PMD CPD reports all of the duplications together in one group.

- There were some cases where jscpd reported multiple duplicates for the same code where one was a subset of the other. This was more pronounced at lower minimum token thresholds, which was used for many tests to test for the greatest number of duplicates.

- The two machine-readable formats for jscpd did not include the token count for

the duplicates (the XML report doesn't include

tokens, and the JSON report always erroneously reportstokens: 0). Having this information is valuable in adjusting the minimum token thresholds.

The following are a few examples for comparison. As with the previous examples, these include simplified code samples for brevity, and where appropriate use artificially low thresholds to find duplicate code. The specific code identified as duplicate is highlighted in each case.

The first is a JavaScript example with code duplicated 3 times, which finds similar results, but illustrates that this is reported as different duplicates by jscpd. It also shows where PMD CPD tended to include more code as part of the duplicate.

PMD CPD results

const badWords = ['badword', 'badword2',

'badword3'];

const myFunction = (value) => {

if (badWords.includes(value)) {

console.log('***');

} else {

console.log(value);

}

};

const myFunction2 = (input) => {

if (badWords.includes(input)) {

// This is the same as the previous one

console.log('***');

} else {

console.log(input);

}

};

const myFunction3 = (something) => {

if (badWords.includes(something)) {

console.log('***');

} else {

console.log(something);

}

};jscpd results

const badWords = ['badword', 'badword2',

'badword3'];

const myFunction = (value) => {

if (badWords.includes(value)) {

console.log('***');

} else {

console.log(value);

}

};

const myFunction2 = (input) => {

if (badWords.includes(input)) {

// This is the same as the previous one

console.log('***');

} else {

console.log(input);

}

};

const myFunction3 = (something) => {

if (badWords.includes(something)) {

console.log('***');

} else {

console.log(something);

}

};const badWords = ['badword', 'badword2',

'badword3'];

const myFunction = (value) => {

if (badWords.includes(value)) {

console.log('***');

} else {

console.log(value);

}

};

const myFunction2 = (input) => {

if (badWords.includes(input)) {

// This is the same as the previous one

console.log('***');

} else {

console.log(input);

}

};

const myFunction3 = (something) => {

if (badWords.includes(something)) {

console.log('***');

} else {

console.log(something);

}

};The second example is in Go, showing similar results (both similar accuracy and limitations).

PMD CPD results

package main

import (

"fmt"

)

func printOdd() {

values := []int{1, 3, 5}

for _, value := range values {

if value == 5 {

fmt.Println("***")

} else {

fmt.Println(value)

}

}

}

func printEven() {

values := []int{2, 4}

for _, value := range values {

if value == 5 {

fmt.Println("***")

} else {

fmt.Println(value)

}

}

}jscpd results

package main

import (

"fmt"

)

func printOdd() {

values := []int{1, 3, 5}

for _, value := range values {

if value == 5 {

fmt.Println("***")

} else {

fmt.Println(value)

}

}

}

func printEven() {

values := []int{2, 4}

for _, value := range values {

if value == 5 {

fmt.Println("***")

} else {

fmt.Println(value)

}

}

}Given all of this data, PMD CPD was chosen for the solution.

The GitLab PMD CPD solution #

This led to the creation of the GitLab PMD CPD project, which includes a container image to run the PMD CPD analysis, and tools to convert the results to a GitLab Code Quality report.

Conversion to GitLab Code Quality report format #

Unfortunately CPM CPD can't accept any kind of template for the output, and from the available options XML is the only structured format. So, a simple Go-based CLI application was created to post-process the XML output, converting it into GitLab Code Quality report formatted JSON. The container image used to run PMD CPD is based on a Java image, and this allowed a binary that could easily be added without requiring extra dependencies.

The GitLab documentation provides details on the required schema for implementing a custom code quality report. This includes the minimum values used by GitLab for displaying the results in the merge request widget or the pipeline view - description, check name, fingerprint, severity, and start line. A review of the GitLab Code Quality and PMD CPD reports showed some other useful data, which was included. An example with the complete final schema is:

[

{

"categories": ["Duplication"],

"check_name": "duplicate-code",

"content": {

"body": " const a = [1, 3, 5];\n console.log(`total: ${a.length}`);\n for (let i = 0; i < a.length; i++) {\n if (a[i] < 5) {\n console.log(a[i]);\n }\n // output error if value equal to 5\n if (a[i] === 5) {\n console.log('error');\n }\n }\n};\n",

"group": 1,

"tokens": 68

},

"description": "Duplicate code found in 2 locations (Duplication 1). Consider refactoring.",

"engine_name": "pmd-cpd",

"severity": "major",

"type": "issue",

"fingerprint": "d0bf6d31894d6482691775a4f031e680dff4a8ab02d2a3d5be036dfdb920002e-1",

"location": {

"path": "internal/testdata/exampleproject/file5.js",

"positions": {

"begin": { "column": 23, "line": 2 },

"end": { "column": 3, "line": 13 }

}

},

"other_locations": [

{

"path": "internal/testdata/exampleproject/file5.js",

"positions": {

"begin": { "column": 20, "line": 16 },

"end": { "column": 3, "line": 27 }

}

}

]

}

]A few changes were made from the original GitLab Code Quality report:

- The

content.bodyvalue includes the duplicate code, whereas the Code Climate report gives a long description on DRY-ness and why duplicate code can be a problem (the same for all duplicates other than the actual mass value). The specific code proved more valuable. - The total

tokensas well as thegroupfor the duplicates were added to thecontentobject. Becausetokensis included, there is noremediation_pointsfield which the Code Climate report provides (although I'm still not sure the value of trying to score the badness of code quality issues). Thegroupis unique to a duplication, and added to differentiate results because each duplicate location is included in the report for each occurrence (aslocationand asother_location). - For simplicity, all

severityvalues aremajor, which seemed consistent with Code Climate results for token thresholds likely to be used in production. The actual Code Climate results, as with the mass threshold variances discussed previously, were an inconsistent mix ofmajor/minor. - The

fingerprintvalue is a SHA256 hash instead of MD5 to avoid any possible collisions. It is also suffixed with the occurrence number of that duplicate. This creates a uniquefingerprintvalue for each instance of each duplicate, which causes all occurrences to be displayed in the GitLab merge request widget. GitLab displays only the last occurrence of each uniquefingerprint, so with the Code Climate report only the last occurrence of each duplication is shown and the report must be opened to find the location of the other occurrences. This implementation causes all occurrences to be shown in the merge request widget with links to the specific code for each.

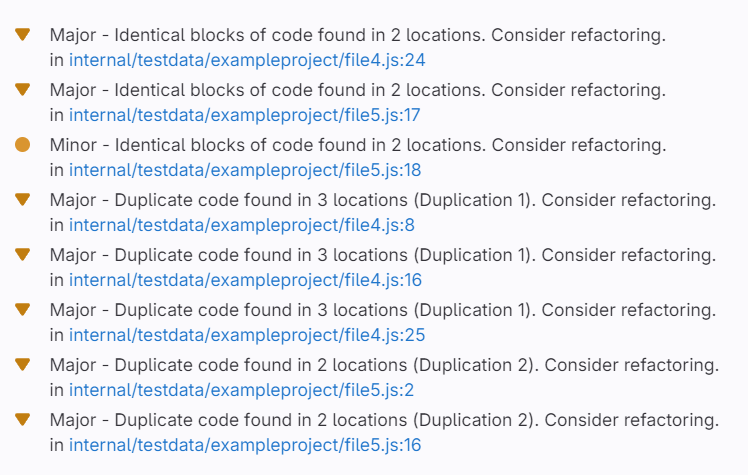

The following example shows the difference in how the results are displayed in GitLab.

The first three are the results from the original Code Climate report (which

show only the last occurrence of each duplicate, and ungrouped duplicates). The

remaining five are from the converted PMD CPD results (which show all

occurrences of each duplicate). These results are from the previous examples

here (file4.js) and

here (file5.js).

Running analyses #

The GitLab PMD CPD container images can be found at

registry.gitlab.com/gitlab-ci-utils/gitlab-pmd-cpd. The image contains all the

tools to run the analysis and report conversion, and a

/gitlab-pmd-cpd/pmd-cpd.sh script as a wrapper to set default values and run

all aspects of the analysis and conversion. The script can be customized with

the following environment variables. Note that the analysis requires specifying

a language, so must be repeated for each applicable language (although that's

easy to automate, as shown in the subsequent GitLab CI example).

PMDCPD_DIR_FILES: The directory or files to scan for duplicate code (default:--dir=.). This must be one of the options--dir,--file-listor--uriwith the appropriate value (for example--file-list=files.txt). Some language-specific examples are shown in the subsequent GitLab CI example. See the PMD CPD docs for additional details.PMDCPD_MIN_TOKENS: The minimum number of tokens to consider a code fragment as duplicate (default:100).PMDCPD_LANGUAGE: The language to use for duplicate code detection (default:ecmascript). The complete list of supported languages can be found here.PMDCPD_RESULTS: The name of the PMD CPD results file (default:pmd-cpd-results.xml). Note that the file extension must be.xmlfor the conversion to GitLab Code Quality report format to work properly, and that the resulting JSON file uses the same base name (for examplepmd-cpd-results.jsonfor the default name).PMDCPD_CLI_ARGS: Any additional PMD CPD command line arguments (default: none). See the PMD CPD docs for additional details.- The

--exclude <path>option can be used to exclude files from the scan. This option can be used multiple times. Note this does not allow globs. - Note that the

--no-fail-on-violation,--skip-lexical-errors=true, and--format="xml"values are fixed in the script.

- The

PMD_JAVA_OPTS: This is used by PMD to set various JVM options, including the heap size. For example,PMD_JAVA_OPTS=-Xmx1024mto set the maximum heap size size to 1 GB.

Not that in an effort to avoid a fight with Oracle, ECMAScript is used instead of JavaScript ®.

Issues with shebang in JavaScript/TypeScript #

When running the CPD analysis, PMD parses each file. Since it is set to ignore

lexical errors, files that fail to parse are ignored (otherwise the analysis

would fail). One issue that was noted with the parser is that it considers a

shebang in JavaScript and TypeScript files to be invalid syntax. This is

typically seen in executable bin files in Node.js projects. There is an

open PMD issue to resolve this, but

until then the pmd-cpd.sh script simply removes the shebang lines before

running the analysis. When running in GitLab CI in a container with an ephemeral

copy of the repository only for this analysis this is not an issue. If the

analysis is run locally, note that it changes these files and does not revert

those changes itself.

GitLab CI jobs #

The following are example GitLab CI jobs to check for duplication in JavaScript, TypeScript, and Go.

.duplication_base:

image:

name: registry.gitlab.com/gitlab-ci-utils/gitlab-pmd-cpd:latest

entrypoint: ['']

stage: test

needs: []

variables:

PMDCPD_MIN_TOKENS: 50

PMDCPD_RESULTS_BASE: 'pmd-cpd-results'

PMDCPD_RESULTS: '${PMDCPD_RESULTS_BASE}.xml'

script:

- /gitlab-pmd-cpd/pmd-cpd.sh

artifacts:

paths:

- ${PMDCPD_RESULTS_BASE}.*

reports:

codequality: ${PMDCPD_RESULTS_BASE}.json

duplication_go:

extends: .duplication_base

variables:

PMDCPD_LANGUAGE: 'go'

PMDCPD_DIR_FILES: '--file-list=go_files.txt'

PMDCPD_RESULTS_BASE: 'pmd-cpd-results-go'

rules:

- exists:

- '**/*.go'

before_script:

- find . -type f -name "*.go" ! -name "*_test.go" > go_files.txt

duplication_js:

extends: .duplication_base

variables:

PMDCPD_LANGUAGE: 'ecmascript'

PMDCPD_CLI_ARGS: '--exclude ./tests/'

PMDCPD_RESULTS_BASE: 'pmd-cpd-results-js'

rules:

- exists:

- '**/*.js'

duplication_ts:

extends: .duplication_base

variables:

PMDCPD_LANGUAGE: 'typescript'

PMDCPD_CLI_ARGS: '--exclude ./tests/'

PMDCPD_RESULTS_BASE: 'pmd-cpd-results-ts'

rules:

- exists:

- '**/*.ts'- The

.duplication_basetemplate sets common defaults, including theimage, defaultvariables,script,artifacts, etc. - As noted previously, the language to check for duplicates must be specified,

so there's a separate job for each language, with

rulesto ensure the applicable jobs run based on file extensions in the repository. - One noteworthy item to be aware of is the different uses of the

PMDCPD_DIR_FILESvariable.- For JavaScript/TypeScript, the default value of

--dir=.is used. This assume a model where test files, for example, are kept in a dedicatedtests/directory, which is excluded with thePMDCPD_CLI_ARGSvariable. If test files are kept with the code, the model used here for Go files should be used. - For Go, tests files are kept with the applicable packages. Since the

--exclude <path>option doesn't accept globs, it can't be used to ignore all*_test.gofiles. So, inbefore_scripta file is created that lists all*.gofiles except the*_test.gofiles. Doing this inbefore_scriptavoids changing the templatescript. The file list is then used for the duplication analysis, specified withPMDCPD_DIR_FILES: '--file-list=go_files.txt'.

- For JavaScript/TypeScript, the default value of

These jobs could be easily extended for other required languages.

Aaron Goldenthal

Aaron Goldenthal